How Much Data Is Needed for Deep Learning?

Modern businesses are increasingly turning to ML and AI-based solutions to predict and meet their customers’ demands more effectively. So it’s unsurprising that the deep learning market is growing rapidly: valued at $49.6 billion in 2022, it is projected to exceed $526 billion by 2030.

You might be wondering how much data you need to train a high-quality deep learning model. Yet no specialist in data annotation and processing can give a definitive answer to this question. Why?

The required amount of data depends heavily on each specific project and the problem it has to solve. A single modest dataset may be enough for one project, while another may need as much data as you can collect.

Despite this, several best practices and methods can help you estimate in advance how much data a deep learning project requires, so you can use object labeling outsourcing wisely. This article will help you with that.

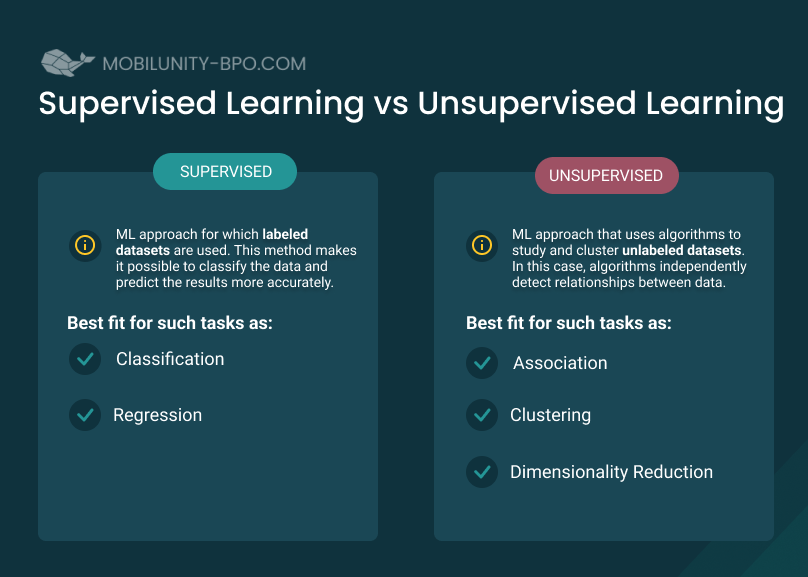

Supervised Learning vs Unsupervised Learning

Before figuring out how much data deep learning needs, you should understand how a neural network can be trained. There are two main approaches: supervised and unsupervised learning. Let’s discuss them in greater depth.

Supervised Learning and Labeled Data

Supervised learning is an ML approach that trains models on labeled datasets, in which each input is paired with the correct output. This lets the model learn to classify data and predict outcomes accurately.

Supervised learning is helpful for two types of tasks:

- Classification. Here, algorithms assign data to predefined categories. For example, an object detection model can distinguish a car from a pedestrian.

- Regression. In this case, algorithms model the relationship between a dependent variable and one or more independent variables. This method works well for predicting numerical values, such as prices or temperatures.
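Both task types can be sketched in a few lines of Python. This is a minimal illustration, assuming tiny hypothetical datasets: a 1-nearest-neighbor classifier stands in for a classification model and ordinary least squares for a regression model. Neither is a deep learning model, but both learn only from labeled examples.

```python
import math

def nearest_neighbor_classify(train, query):
    """Classification: return the label of the labeled example closest to `query`."""
    # `train` is a list of (feature_vector, label) pairs prepared by annotators.
    _, label = min(train, key=lambda item: math.dist(item[0], query))
    return label

def fit_simple_regression(xs, ys):
    """Regression: ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Hypothetical labeled examples: (length in m, height in m) -> object class.
labeled = [((4.5, 1.8), "car"), ((4.0, 1.5), "car"),
           ((0.5, 1.7), "pedestrian"), ((0.4, 1.6), "pedestrian")]
print(nearest_neighbor_classify(labeled, (4.2, 1.6)))  # prints: car

# Hypothetical numeric target: the fit recovers the line y = 2x + 1.
print(fit_simple_regression([1, 2, 3, 4], [3, 5, 7, 9]))  # prints: (2.0, 1.0)
```

In both cases the "knowledge" comes entirely from the labels, which is why supervised projects depend so heavily on annotation.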

Unsupervised Learning and Unlabeled Data

Unsupervised learning uses ML algorithms to analyze and cluster unlabeled datasets. In this case, algorithms discover patterns and relationships in the data on their own, without human-provided labels.

Unsupervised learning works well for the following tasks:

- Clustering. This method groups data points by similarity, so that items in the same cluster are more alike than items in different clusters. Hierarchical clustering goes a step further: algorithms build a tree of nested clusters, from broad groups down to fine-grained ones.

- Dimensionality reduction. This approach reduces the number of features in a dataset by removing redundant ones. For example, it can be used to reduce noise in images.

- Association. This method discovers relationships between variables in a dataset, such as products that are frequently bought together.
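Clustering can be illustrated with k-means, one common clustering algorithm chosen here for simplicity (the article does not prescribe a specific method). The minimal sketch below groups unlabeled 2D points into k clusters without ever seeing a label; the points are hypothetical.

```python
import math
import random

def k_means(points, k, iterations=20, seed=0):
    """Group unlabeled points into k clusters; returns (centroids, clusters)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, clusters

# Hypothetical unlabeled data with two obvious groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = k_means(points, k=2)
print(clusters)
```

The two well-separated groups come back as two clusters of three points each, even though no point was ever labeled.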

The primary difference between supervised and unsupervised learning is whether the training data is labeled.

Supervised learning relies on datasets that humans have selected and often labeled manually. Because the algorithm learns from cleaned, structured examples with known correct answers, its predictions are typically more accurate.

Unsupervised learning algorithms, on the other hand, learn without labels and are well suited to processing large volumes of new data. Their predictions are usually less accurate than those of supervised models, but they can help identify anomalies or non-obvious relationships.

How Much Data for Deep Learning Do You Need: Some Influencing Factors

It’s time to find out how many data points your neural network will require. Consider the following factors when estimating the size of the dataset you need:

Model Complexity

Think about how many parameters your model has and how many input features it must take into account. The more parameters the model has to learn, the more training data it generally needs to fit them reliably rather than memorize a small sample.
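To see how quickly model size grows, the sketch below counts the weights and biases of a fully connected network and applies a crude "rule of 10" style heuristic (ten examples per parameter). The multiplier is an assumption, not a law: modern deep networks often generalize well with fewer examples than parameters, so treat the result only as a rough sanity check. The layer sizes are hypothetical.

```python
def dense_param_count(layer_sizes):
    """Count weights plus biases in a fully connected network.

    layer_sizes lists the width of every layer, input first, e.g. [784, 128, 10].
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def heuristic_examples_needed(num_params, examples_per_param=10):
    """Crude rule-of-thumb estimate; the multiplier is an assumption."""
    return num_params * examples_per_param

small = dense_param_count([784, 128, 10])        # 101,770 parameters
large = dense_param_count([784, 512, 512, 10])   # a wider, deeper variant
print(small, heuristic_examples_needed(small))
print(large, heuristic_examples_needed(large))
```

Adding one wider hidden layer multiplies the parameter count several times over, which is the intuition behind "more parameters, more data".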

Algorithm Complexity

The same principle applies to learning algorithms: the more complex the algorithm, the more data it needs to work properly. A deep neural network, for instance, typically needs far more examples than a simple linear model.

Permissible Error Margin

If you need ML to make highly accurate predictions, you will require a lot of training data, because there is little room for mistakes. If a wider margin of error is acceptable, you’ll need less data.
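One common way to connect the acceptable error to the amount of data is a learning curve: train on a few increasing subsets, measure validation error, and extrapolate. Deep learning error is often observed to fall roughly as a power law of training-set size, error ≈ a · n^(-b). The minimal sketch below assumes that form and ignores any irreducible error floor; the pilot measurements are hypothetical.

```python
import math

def fit_power_law(sizes, errors):
    """Fit error ≈ a * n**(-b) via least squares on log(error) versus log(n)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(e) for e in errors]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    a = math.exp(mean_y - slope * mean_x)
    return a, -slope

def examples_for_target_error(a, b, target_error):
    """Invert error = a * n**(-b) to estimate the training-set size n."""
    return math.ceil((a / target_error) ** (1 / b))

# Hypothetical pilot runs: validation error measured at four training-set sizes.
sizes = [100, 400, 1600, 6400]
errors = [0.20, 0.10, 0.05, 0.025]
a, b = fit_power_law(sizes, errors)
for target in (0.02, 0.01):
    print(target, examples_for_target_error(a, b, target))
```

With these numbers the fit gives b ≈ 0.5, so halving the target error from 0.02 to 0.01 raises the estimate from roughly 10,000 to roughly 40,000 examples: a tighter error margin can demand disproportionately more data.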

Deep Learning Annotation Needs

The amount of data also depends on your annotation requirements, which follow from the complexity of the task. For example, teaching an algorithm to distinguish pedestrians from cars requires a large number of labeled examples. In contrast, far fewer annotated examples are needed for it to differentiate simple shapes like triangles and circles.

Data Diversity

You’ll need to collect a lot of data if your model has to handle many different situations. For example, if you’re creating an AI-powered chatbot that communicates naturally with your customers, its training data must cover the wide range of topics, phrasings, and questions those customers may bring up.
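A simple way to check diversity before training is to count how many examples each category has and flag the gaps. The sketch below assumes a chatbot dataset in which each message carries an intent label; the intent names and the 50-example threshold are hypothetical.

```python
from collections import Counter

def coverage_gaps(labels, expected_labels, min_examples):
    """Return each expected category that has fewer than min_examples examples."""
    counts = Counter(labels)
    # Counter returns 0 for categories that never appear in the data.
    return {label: counts[label] for label in expected_labels
            if counts[label] < min_examples}

# Hypothetical chatbot training data, one intent label per customer message.
labels = ["billing"] * 120 + ["shipping"] * 45 + ["returns"] * 3
print(coverage_gaps(labels, ["billing", "shipping", "returns", "warranty"], 50))
# prints: {'shipping': 45, 'returns': 3, 'warranty': 0}
```

Categories that are missing entirely show up with a count of 0, which tells you where to focus further data collection.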

What to Do If You Lack Labeled Data

If you don’t have enough labeled data for deep learning, your model’s predictions may be inaccurate, or it may be impossible to train the model at all. You can address this problem in the following ways:

Transfer Learning

This approach reuses a model already trained on one dataset as the starting point for a similar task, so the new task needs much less labeled data.

If, for example, you have already taught the algorithm to recognize images containing fruits, then you can use this knowledge to help the AI determine the exact types of fruits (for instance, to distinguish an apple from a banana).
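In deep learning, transfer learning usually means starting from a network pretrained on a large dataset and fine-tuning it on the new task. The pure-Python sketch below shrinks that idea to a one-feature linear model so it runs anywhere: the model is first trained on a data-rich source task, and its learned weights then serve as the starting point for a related target task with only five labeled examples. Both tasks, the learning rate, and the number of training steps are hypothetical choices for illustration.

```python
import random

def train_linear(xs, ys, w=0.0, b=0.0, lr=0.1, epochs=100):
    """Full-batch gradient descent on mean squared error for y ≈ w*x + b.

    Passing in w and b lets training start from previously learned weights.
    """
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(0)

# Source task: plenty of labeled data (hypothetical relationship y = 3x + 1 plus noise).
src_x = [rng.random() for _ in range(200)]
src_y = [3 * x + 1 + rng.gauss(0, 0.1) for x in src_x]
w_src, b_src = train_linear(src_x, src_y, epochs=500)

# Target task: related (y = 3x + 1.2) but with only five labeled examples.
tgt_x = [0.1, 0.3, 0.5, 0.7, 0.9]
tgt_y = [3 * x + 1.2 for x in tgt_x]
test_x = [i / 100 for i in range(101)]
test_y = [3 * x + 1.2 for x in test_x]

# Same small training budget for both: 10 gradient steps on the target data.
w_ft, b_ft = train_linear(tgt_x, tgt_y, w=w_src, b=b_src, epochs=10)
w_scratch, b_scratch = train_linear(tgt_x, tgt_y, epochs=10)

finetuned_err = mse(test_x, test_y, w_ft, b_ft)
scratch_err = mse(test_x, test_y, w_scratch, b_scratch)
print(f"fine-tuned: {finetuned_err:.4f}, from scratch: {scratch_err:.4f}")
```

With the same ten training steps, the model that starts from the source weights ends much closer to the target relationship than the one trained from scratch, which is the benefit transfer learning aims for when labeled data is scarce.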

Data Augmentation

Data augmentation expands a dataset by making slight changes to the existing data, so each original example yields several new ones that keep the same label.

This method is common in image classification. Typical modifications include changing the colors of the pictures, cropping, scaling, or flipping them. Augmentation can also be applied to text, for example by translating sentences into another language and back again to obtain paraphrases.
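The sketch below applies two of the modifications mentioned above, horizontal flipping and a brightness (color) shift, to tiny grayscale images stored as lists of pixel rows. It is a minimal illustration under those assumptions; production pipelines usually rely on an image library’s built-in transforms instead.

```python
import random

def flip_horizontal(image):
    """Mirror a grayscale image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def adjust_brightness(image, delta):
    """Shift every pixel by delta, clamped to the 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]

def augment(dataset, copies_per_image, rng):
    """Return the original (image, label) pairs plus randomly modified copies."""
    augmented = list(dataset)
    for image, label in dataset:
        for _ in range(copies_per_image):
            new_image = flip_horizontal(image) if rng.random() < 0.5 else image
            new_image = adjust_brightness(new_image, rng.randint(-30, 30))
            augmented.append((new_image, label))  # the label stays the same
    return augmented

# Hypothetical 2x3 grayscale "images" with class labels.
dataset = [([[10, 20, 30], [40, 50, 60]], "apple"),
           ([[200, 210, 220], [230, 240, 250]], "banana")]
bigger = augment(dataset, copies_per_image=3, rng=random.Random(42))
print(len(bigger))  # prints: 8
```

Two labeled images become eight, and none of the new ones required additional annotation work.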

Synthetic Data Creation

Generating synthetic data is partly similar to augmentation, with one critical difference: instead of slightly changing existing data, you create entirely new data points with the same properties.

For example, if you are training an algorithm to recognize apples, you would generate new images that contain apples but differ from the ones you already have.
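Real projects generate synthetic images with techniques such as 3D rendering, simulation, or generative models. As a far simpler stand-in for tabular data, the sketch below fits a Gaussian to each feature of a class and samples brand-new rows from it. It assumes the features are independent (ignoring correlations between them), and the apple measurements are hypothetical.

```python
import random
import statistics

def fit_class_gaussians(rows):
    """Estimate a mean and standard deviation for each feature of each class."""
    by_label = {}
    for features, label in rows:
        by_label.setdefault(label, []).append(features)
    return {label: [(statistics.mean(col), statistics.stdev(col))
                    for col in zip(*examples)]
            for label, examples in by_label.items()}

def sample_synthetic(model, label, n, rng):
    """Draw n new examples of `label` from the fitted per-feature Gaussians."""
    return [([rng.gauss(mu, sigma) for mu, sigma in model[label]], label)
            for _ in range(n)]

# Hypothetical measurements: (diameter in cm, weight in g).
real = [((7.0, 150), "apple"), ((7.5, 170), "apple"),
        ((8.0, 180), "apple"), ((7.2, 160), "apple")]
model = fit_class_gaussians(real)
synthetic = sample_synthetic(model, "apple", n=1000, rng=random.Random(0))
print(synthetic[:2])
```

The generated rows are not copies of the originals, yet their averages and spread match the real data, which is the property the text describes.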

Approximate Time and Costs of Data Annotation for AI

As discussed above, labeled data allows for more accurate results and better-trained algorithms. If you choose annotation for machine learning, it helps to know the approximate time and cost of outsourcing it.

Suppose your model needs at least 90,000 labeled items to work properly. If an annotator makes one annotation every 30 seconds, a single specialist will need about 750 hours to complete the entire task (90,000 × 30 s = 2,700,000 s = 750 h).

The price of labeling services varies by country and even by city. For instance, a freelance data annotation specialist in the US earns about $20 per hour. Consequently, 750 working hours would cost you $15,000.
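The same arithmetic can be wrapped in a small helper to try other scenarios. The `team_size` and `hours_per_day` parameters are hypothetical additions, assuming an 8-hour working day, to show how calendar time changes with more annotators.

```python
def annotation_estimate(items, seconds_per_item, hourly_rate,
                        team_size=1, hours_per_day=8):
    """Return (total work hours, total cost, calendar working days)."""
    hours = items * seconds_per_item / 3600
    cost = hours * hourly_rate
    days = hours / (team_size * hours_per_day)
    return hours, cost, days

# 90,000 items, 30 s each, $20 per hour.
print(annotation_estimate(90_000, 30, 20))               # prints: (750.0, 15000.0, 93.75)
print(annotation_estimate(90_000, 30, 20, team_size=5))  # prints: (750.0, 15000.0, 18.75)
```

In this simple model, a larger team shortens the schedule but not the total bill; only a lower hourly rate or faster annotation reduces the cost.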

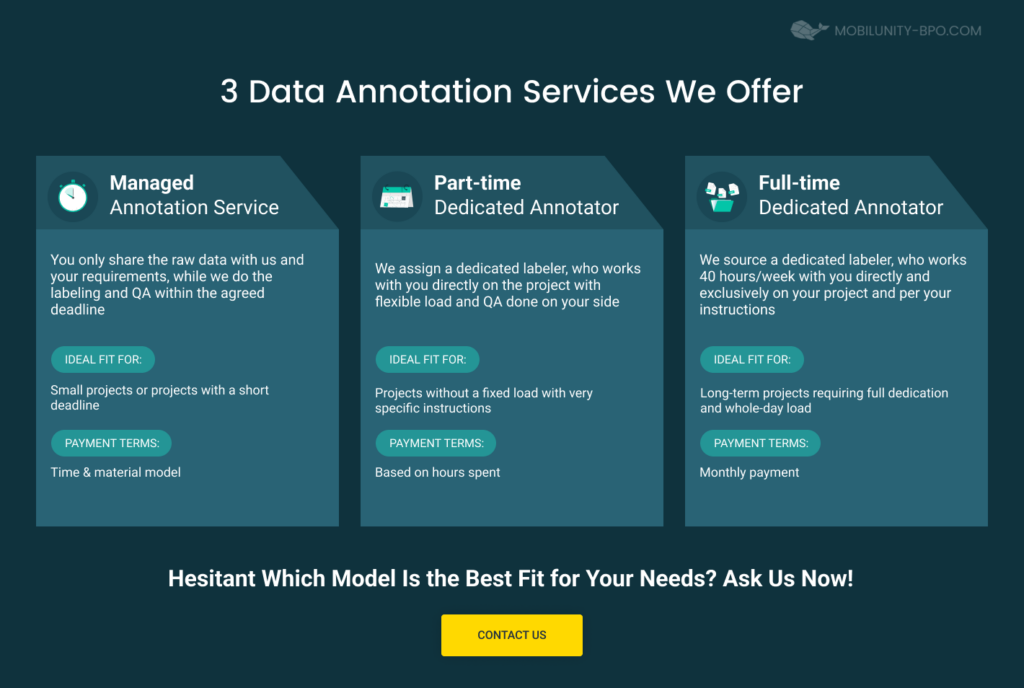

Consider Mobilunity-BPO for Data Labeling

Mobilunity-BPO is a company with over ten years of experience in the tech market and is well versed in annotation for machine learning. Our dedicated specialists can be the perfect solution if you opt for data labeling outsourcing.

After seeing the high prices for annotation in the US, you’ll be pleased by the affordability of Ukrainian specialists. Additionally, our data labeling professionals are no less qualified than others in this field.

We also offer a dedicated team model of cooperation. Our specialists are well suited to long-term projects and will get to know your business like no other. Alternatively, a flexible cooperation model lets you hire workers for short-term tasks.

If you want to learn more about data annotation for machine learning or cooperation with us, just drop us a line. We’ll contact you and tell you more about the data labeling opportunities offered by Mobilunity-BPO.