Full Guide to Data Labeling in Machine Learning and AI

Do you enjoy the comfort of unlocking a phone with your face or driving the fastest root home offered by your voice assistant? That’s because the AI (Artificial Intelligence) algorithms behind these tasks work well.

And good AI algorithms are just like good students: both need to learn from the best teachers and study hard. That’s why Machine Learning (ML) requires high-quality training data and time. And the creation of AI training datasets starts with proper data labeling, so in this guide, we’ll tell you all about this process and help you hire professional data labelers.

What Is Data Labeling in Machine Learning?

AI algorithms ground their decisions on data labels assigned to pictures, texts, audio, or video files. And the more accurate those annotations are, the more correct predictions an ML model will make. For example, to teach Artificial Intelligence to distinguish horses and zebras, humans have to do image annotation of hundreds of pictures and feed them to the model – and do that without mistakes that can be possible with the help of the best image annotation companies. So even from this primitive example, it’s evident that the quality of the training data is essential for practical AI algorithms. However, pre-processing of information isn’t easy for a labeler as ML tasks become more specialized and complex and, thus, quite time-consuming.

According to Cognilytica research, companies spend 25% of their time solely labeling data for machine learning projects. Only its cleansing (removing duplicates and incorrect/incomplete data) requires the same time resources, followed by augmentation (15%), aggregation (10%), and identification (5%). These five data-related processes take 80% of the total time dedicated to an ML project, leaving only 20% for direct work with the AI model.

This way, not every business can manage the growing manual workload and equip themselves with in-house data labelers. And their demand for third-party AI video annotation services will only grow – together with the data labeling market that will reach $4.1 billion by 2024. That’s why so many companies offer outsourcing data preparation for projects related to Machine Learning. But before we switch to the approaches of the best to labeling various files, let’s find out more about the accuracy and quality of your dataset.

4 Factors that Affect Quality of Labeled Data

Sometimes you can see that the terms “quality” and “accuracy” are used to express the same idea. However, data quality is a complex criterion determining how reliable the information is for a specific purpose. It comprises several parameters such as accuracy, validity, completeness, timeliness, uniqueness, and consistency. So, accuracy is only a component of quality, and accurate data should be unambiguous and consistent. Now let’s check what decreases the quality of your labeled files when it comes to outsource data annotation services.

#1 When data annotators don’t know the context of your ML project or lack knowledge about the industry.

Though most of a data labeler’s time is spent on tagging that doesn’t look complicated, domain expertise can be crucial for specialized industries. Healthcare is, for example, one of the annotation spheres that call for highly-qualified human resources. Because technically, marking a dog and a tumor on an image is identical, recognizing pathologies on X-ray, MRIs (Magnetic Resonance Imaging), and CTs (Computed Tomography) requires a medical degree. And doctors usually have more important tasks than annotating data. So, when choosing a dedicated team for putting machine learning labels, you’ll need to fill it in with statements of work and probably explain specific features to your new colleagues during meetings. And, of course, you should make sure they’re qualified for doing the job.

#2 When data labelers can’t quickly adjust to additional volume, duration, or complexity of tasks.

Often, a machine learning project doesn’t assume a once-set annotating task, and unexpected issues can arise in the course of completing the initial labeling assignment. For example, your target audience needs can alter, or you’ll decide to add new products. These changes may result in increased dataset volume and more complicated tasks, which leads to extending the project’s duration. So, your annotation team (or provider) should be flexible to various changes throughout the project and be ready to scale its solutions – and you need to discuss this option before you launch.

#3 When your annotation team can’t ensure data security and compliance.

Every AI project is confidential, and it should be treated appropriately. That’s why understanding the approaches to information protection is vital for every labeler you take on board. Your teams should be ready to deal with potential data leaks and breaches, so your IT security should give clear instructions on how to act in case of accidents. Another important note is that when PII (Personally Identifiable Information) is related to your dataset, its security should be one of your main concerns. NDAs (Non-Disclosure Agreements) is a common practice that helps keep sensitive data private and secured. And the last thing we’d like to mention here is keeping information of third parties in compliance with industry standards like GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act), and others.

#4 When your labeling staff isn’t equipped with advanced annotation tools.

When it comes to labeling data machine learning algorithms require only top-quality results. And though the process requires a human touch, this touch should be made with progressive image, audio, text, and video annotation tools. Whether it’s your in-house software, cloud-based instrument, or a hybrid customized solution, it has to meet the specific requirements of your ML project. A good labeling tool should:

- Be able to import, process, copy, sort, and combine big datasets.

- Be optimized for your tasks and annotation methods.

- Have a QC (Quality Control) module installed.

- Ensure data privacy and security through VPN, assigning viewer and editor rights to users, recording logs, etc.

So, choosing a decent instrument for your annotation team is crucial for the labeling process’s performance, quality, and speed. And now, let’s consider ways of cooperation with the team that will label data for your AI model.

5 Approaches to Labeling Data

At this point, you can ask which data labeling approach fits your Machine Learning project best – and the answer depends on several factors. These can be the volume of the dataset, the format of its files, the overall difficulty, budget, and deadlines. We’ve described five basic approaches to file annotation and mentioned their pros and cons.

#1 Employing internal data annotation department.

In-house teams are ideal for insignificant input volumes and more specialized projects. That’s because the cost of employing smaller groups is nearly the same as outsourcing expenses to process limited volumes of images, video, audio, or text. Or, dedicated departments can be arranged when the project is confidential, and only a few people should know its details. Or, if, for example, your project requires specific skills to annotate audio, it’ll be much more time-consuming to train new people than using the help of outsource video annotation specialists. As for finding professional annotators, you can delegate it to your corporate recruiters and have the team assembled within a couple of months.

#2 Outsourcing a dedicated data labeling team.

The outsourcing option is full of advantages, and here are the main ones:

-

- Free your core team for more valuable tasks. Often, in-house employees have to combine multiple duties within a project. It means that they’ll have to switch between tasks even when those could be done in parallel. And this, in its turn, will lead to delays and shifting deadlines. But with outsourced specialists, you can leverage the optimized workload. Moreover, professional data scientists will be able to focus on development and innovation rather than on redundant and time-consuming AI label putting.

- Get better work quality. Experts whose core job is file annotation can label files, texts, and objects quicker and more thoroughly. That’s because they know all the ins and outs of working with various formats and utilizing software tools. While multitasking your ML team can affect the overall performance, delegating routine work to dedicated specialists is a great alternative. Moreover, you’ll be able to get the same perfect service once you need to scale operations, as top outsourcing providers can always add more staff to label data for machine learning models.

- Remove any subjectiveness. If you grow an AI project from the very start, you tend to treat it as a child. And it’s hard to stay unbiased, having a great desire to get long-awaited output. That’s how in-house employees can unintentionally skew results and decrease the accuracy of the whole training set. In contrast, attracting third-party data annotation experts will help you get objective and high-quality results that will make your model closer to real life. You can find outsourcing companies that label data through Google Search, social media, or popular job boards.

#3 Crowdsourcing a data labeling team.

Crowdsourcing is the most cost-effective option for labeling files, and you can find freelance data annotators on multiple crowdsourcing platforms. Such platforms can provide their workforce with tools and arrange training for ML projects, so you won’t have to pay for software. In addition, freelance labelers get access to special documentation, tutorials, technical libraries, notes, etc., which help them grow professionally. This way, you can assign a team of data annotators but need to double-check the reliability of the platform, its management and communication options, and utilized tools.

#4 Using synthetic labeling.

Your ML model can be trained on actual datum (generated by real-life events) and synthetic (artificially created dataset). And synthetic data is what you get in the process of synthetic labeling. It’s usually used when you work on a brand new product or service, and actual results can’t be generated naturally. Or when you need to replace sensitive information in your actual dataset. So, there are two types of obtained training data: fully and partially synthetic. One of the most popular ways to arrange synthetic labeling is the utilization of GANs (Generative Adversarial Networks), which deliver very realistic, high-quality outputs. However, this method requires significant computing resources, and therefore, is rather expensive.

#5 Incorporating programmatic approach.

Programmed annotation uses rule-based systems that consist of labeling functions. Such functions allow annotating various objects and entities quickly and efficiently. But though this approach is quite straightforward, it’s limited by the outlined scenario. That’s why this method still requires human supervision and quality control. You can find an open-sourced programmatic tool that labeles your data or get a decent payable instrument after some research on the internet.

Here’s how you can get your dataset labeled, but what about the expenses? Read on to learn how much other ML practitioners spend on preparing their training data.

Data Labeling Techniques and Their Use Cases

In the world of machine learning and artificial intelligence (AI), data labeling plays a pivotal role. These labels, applied by data annotation services for machine learning, provide the crucial inputs that enable AI systems to learn, comprehend, and make predictions about new, unseen data. This process allows AI models to generate valuable insights from raw data. Different techniques exist for data labeling, each carrying its unique set of merits and potential applications. This article explores three primary methods: Manual, Semi-Automatic, and Automatic data labeling.

Manual Data Labeling: The Human Touch

Manual data labeling is often seen as the gold standard for data annotation in machine learning. The reason is simple: the human touch. This approach involves data annotators manually labeling data, often using a specialized annotation tool.

While this method can be time-consuming and resource-intensive, it offers unparalleled quality and precision, especially for complex tasks that require human judgment and understanding. An example might include labeling medical images where the nuances of the images might be challenging for automated methods to accurately capture.

Manual labeling is often a preferred choice for high-stakes applications, like healthcare and autonomous vehicles, where accuracy cannot be compromised. With manual data labeling, machine learning outsourcing becomes a viable strategy for businesses lacking in-house expertise.

Semi-Automatic Data Labeling: The Best of Both Worlds

Semi-automatic data labeling represents a hybrid approach, combining the accuracy of human judgment with the efficiency of AI. Here, AI is used to pre-label the data, following which human annotators review and correct the labels as needed. This technique effectively reduces the time and effort required in the labeling process, without compromising the quality of the output.

A use case for semi-automatic data labeling could be sentiment analysis, where AI pre-labels text data as positive, negative, or neutral, with human annotators refining these labels based on the nuances of the language. For organizations looking to outsource annotation machine learning tasks, semi-automatic data labeling presents an appealing balance of cost, speed, and accuracy.

Automatic Data Labeling: AI Training AI

In the automatic data labeling approach, AI annotation is entirely responsible for the labeling process. Automated algorithms assign labels to data based on predefined rules or patterns, with minimal human intervention. Automatic labeling is especially efficient when dealing with large volumes of data and simple, repetitive tasks.

A prime example of automatic data labeling use is in e-commerce, where millions of products can be automatically labeled into categories based on their descriptions and images. As machine learning continues to evolve, so does the sophistication of automatic labeling, driving increased adoption of this technique.

However, despite its speed and cost-efficiency, automatic labeling lacks the nuanced understanding that human annotators bring, making it less suitable for complex tasks. Thus, companies considering machine learning outsource should evaluate their specific needs and the nature of their data before choosing an appropriate data labeling technique.

In conclusion, the choice between manual, semi-automatic, and automatic data labeling hinges on factors such as data complexity, resource availability, budget constraints, and the specific requirements of the AI or machine learning project. By understanding the strengths and limitations of each technique, businesses can make an informed decision and maximize the value derived from their data.

Benefits of Outsourced Labeling Data for Machine Learning

Machine learning (ML) is revolutionizing various industries, from healthcare and finance to manufacturing and marketing. However, the success of any machine learning algorithm depends on the quality and quantity of the data used to train it. Labelling data for machine learning is a critical step in the development of ML models, as it involves annotating raw data with appropriate labels to enable the algorithm to learn patterns and make predictions. Outsourcing data labelling services has become an increasingly popular option for businesses looking to save time, resources, and ensure high-quality labelled data for their ML projects. In this article, we’ll explore the benefits of outsourcing labelling data for machine learning and discuss why outsource data annotation can be advantageous for your business.

Access to Expertise and Specialized Skills

Labelling data for machine learning requires specialized skills and a deep understanding of the specific requirements of various ML models. By outsourcing machine learning data labeling, you gain access to a team of experts with extensive experience in ML labeling datasets for machine learning across various domains, such as image recognition, natural language processing, and audio labelling. These professionals can ensure that your data is accurately and consistently labelled, ultimately improving the performance of your ML models.

Cost-Effectiveness

Building an in-house team for machine learning label data can be expensive, as it involves hiring and training specialized personnel, investing in infrastructure, and maintaining a dedicated workspace. Outsourcing data labelling services allows you to significantly reduce these costs, as you only pay for the services you require. Additionally, outsourcing can help you avoid the costs associated with employee turnover and training, as the data labelling services provider will be responsible for managing their team of experts.

Scalability and Flexibility

As your ML projects grow in size and complexity, your data labelling needs may evolve. Outsourcing label data in machine learning enables you to scale your data labelling efforts easily, as the service provider can quickly adjust their workforce and resources to accommodate your changing requirements. This flexibility allows you to manage fluctuations in your data labelling workload without compromising on quality or turnaround time.

Quality Control and Data Consistency

Ensuring the quality and consistency of labeled data for machine learning is crucial for the success of your ML models. Outsourcing ML labelling to a professional data labelling services provider allows you to benefit from their quality control processes, which typically involve multiple layers of review and validation to ensure the highest level of accuracy and consistency. This rigorous approach to quality control can help minimize errors and improve the overall performance of your machine learning algorithms.

Faster Turnaround Times

Outsourcing data labeling for machine learning can result in faster turnaround times, as the service provider can dedicate a team of experts to work on your project full-time. This dedicated workforce can complete large-scale data labelling tasks more efficiently than an in-house team, enabling you to speed up the development and deployment of your ML models.

Access to Advanced Tools and Technologies

Data labelling services providers typically utilize cutting-edge tools and technologies to streamline the labelling process and ensure the highest level of accuracy. By outsourcing data labeling ML, you can benefit from these advanced tools without having to invest in them yourself or dedicate time to mastering their intricacies. This access to state-of-the-art technologies can improve the overall quality and efficiency of your data labelling efforts, ultimately leading to better ML model performance.

Focus on Core Business Activities

Outsourcing labelling data for machine learning allows you and your team to focus on core business activities, such as product development, sales, and customer service. By delegating the time-consuming and specialized task of data labelling to a dedicated team of experts, you can free up your in-house resources to concentrate on other essential tasks.

Cost of Labeling Data for Machine Learning

To understand what stands behind the price for annotation services, you need to understand how labeling is implemented. Most often, you’ll get a fixed price for an image classification task. However, the price will depend on the video duration and the number of words in the text you need to process. For example, you’ll be asked to pay for every 10 seconds of a video file or every 100 processed words in a text. If you need to identify objects on an image, you’ll probably get a quote for every bounding box or segment. The same will apply to videos, and you’ll pay for every tracked object. For entity marking in the text annotation assignments, you’ll be charged per number of words and number of extracted entities. But you’ll never want to get a dataset with low accuracy, so hiring one person who labels data (even of the same format) isn’t reliable. And this leads us to the main cost driver – number of labelers required per one type of task.

If one person marks only part of the object, another one will create a too big box, the third will identify the wrong object, and only the fourth will complete the required task – how many people should you engage? Usually, this number was calculated based on the allowed budget and required data quality, resulting in something like “the more, the better.” However, there is a smarter and more cost-effective approach to labeling your dataset – active learning. This AI algorithm assumes that not every object will have the same effect on the ML model, and thus, it can identify the most impactful input criteria. However, you’ll still have to decide on the number of labelers to assign but will be able to approach the most critical data first.

Choosing Your Data Labelling Provider: 10 Questions to Ask

We’ve collected the basic questions that you need to ask your data labeling provider before starting cooperation. But firstly we recommend to watch this video, in which experts shed light on the key considerations when seeking data annotation services.

These insights complement the set of questions below, ensuring that businesses have a comprehensive understanding of what to look for in a provider:

What software do you use? Is this platform suitable for completing my tasks? Does it let me monitor and manage all annotation tasks?

Professional data annotators use advanced labeling tools that will fit your ML/AI project requirements. Top software solutions allow tracking, reporting, quality assurance (QA), and other features, so controlling your annotation team shouldn’t be an issue.

How will I train my new team? And how long will it take?

Data labeling providers usually assign annotation specialists who have experience in your domain. That’s why highlighting the AI project’s context through documentation, and several meetings are usually enough to start working on a new task. However, for more specialized spheres like healthcare or agriculture, training can take more time.

How do you measure the quality of machine learning labeled data? Do you offer a sample review? And what if this quality won’t fit my project requirements?

There are several ways to measure your annotation team’s performance. Productivity can, for example, be assessed based on the quality, volume, and engagement of your workforce. Every provider has its own approach to classifying errors and assessing accuracy, and a sample review should be standard practice.

How will the communication with my new team be organized? Will they be responsive to my requests and provide feedback as I ask questions?

When it goes about your product or customer experience, getting timely responses from your labeling team can be crucial when it comes to outsource text annotation services. That’s why setting the right communication channel and schedule between your Machine Learning and annotation teams will help you stay proactive and responsive to your clients’ needs.

Is my information securely stored? How can I be sure that only authorized users access it? Can we sign NDAs with every data labeler?

Protecting the client’s information is one of the prerogatives of a professional outsourcing provider. Modern image, audio, video, and text annotation tools are equipped with access-setting features that help to control corporate data. And to secure confidentiality to a client, top data labeling providers will sign non-disclosure agreements.

Will the sensitive information be treated in compliance with current privacy regulations? Will the PII in my raw dataset remain confidential?

Most of the advanced data annotation software boasts features that help your company keep the personal information from your dataset private and compliant with GDPR, CCPA, and other regulations. So, you won’t have to install any add-ons or use extra tools to follow the data privacy regulations.

How much will I have to pay? What does the price depend on?

Prices depend on the datasets’ volumes, the number of engaged labelers, and annotations, and there won’t be a single option for every case. The cheapest assignment will be a classification task, and the most expensive involves object identification.

Will you be able to meet my deadlines? And what if data labelers will need additional time to complete the project? What’s the best team size for my project?

The manager of your annotation team should be able to estimate time frames and human resources required to label specific volumes of data. And possible reasons for shifting deadlines need to be discussed and stated in a contract before the team starts working on your dataset.

How will you deliver the results? Can I choose the format?

You should be able to choose the format of the output data. Whether it be Excel, XML, CSV, JSON, or others, the extension type isn’t an issue for any labeled data machine learning output.

What if I need to change the volume or labeling approach in the middle of the project? Will my team be flexible to meet new requirements?

A client-oriented provider should be able to meet the changing requirements of its customer. So, the possibility to engage additional data labelers or commit more time to the project.

Mobilunity-BPO Is Your Reliable AI Data Labeling Provider

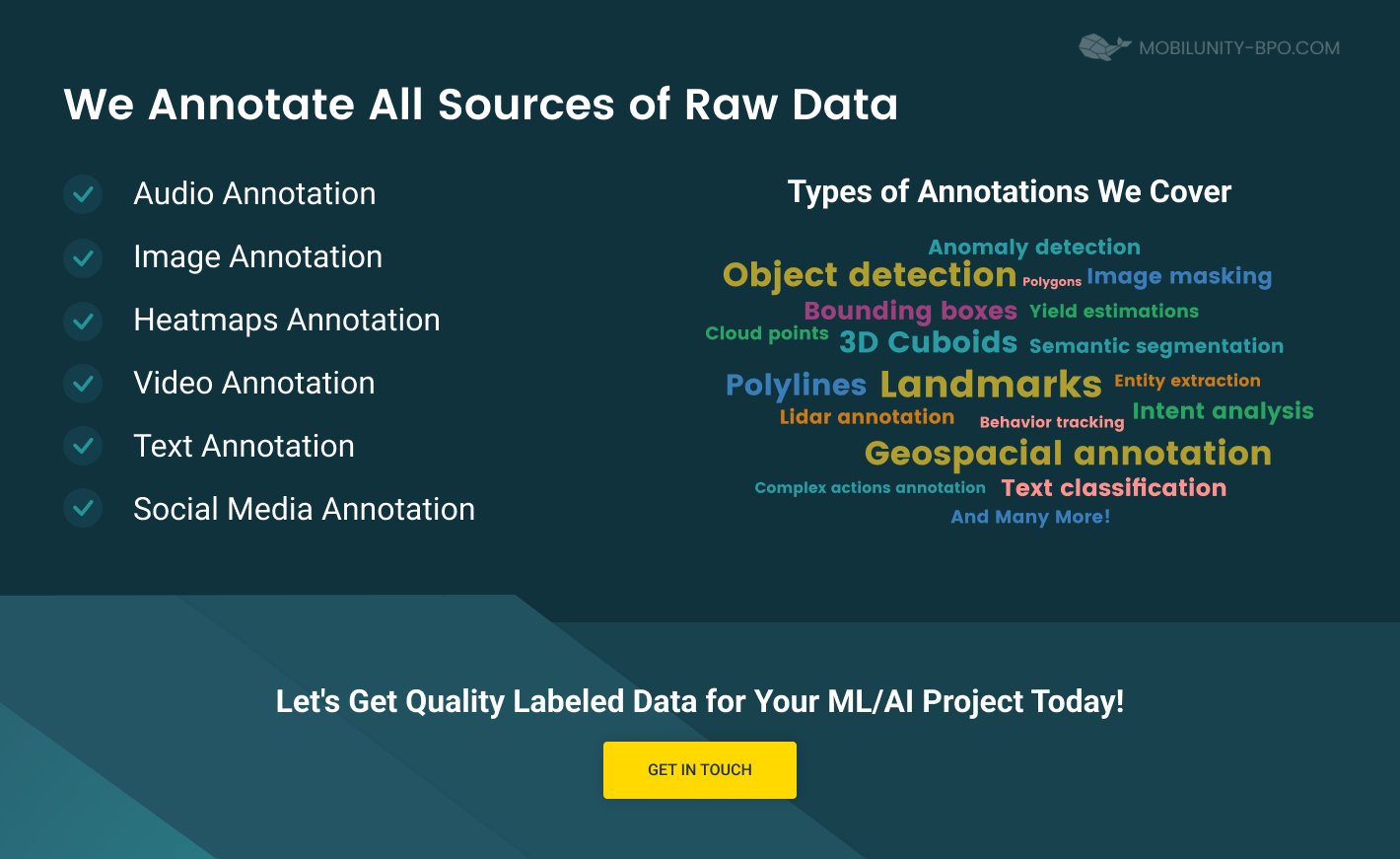

We’ve been hiring top professionals for multiple businesses, building dedicated customer support, recruiting, marketing, and BizDev teams for over a decade. And our expertise in forming dedicated machine learning label teams is also vast. With us, you can benefit from highly-skilled Ukrainian data annotators and have your AI model trained within the set deadlines and budgets. Here are the tasks that we can help you complete:

- Image labeling

- Video annotation

- Audio labeling

- Text annotation

- Data anonymization

- Chatbot training

And here’s how Mobilunity-BPO assembles your Ukraine-based data annotation team:

- You complete the contact form below and discuss all the details when our Sales Manager gets in touch (typically within 2 hours).

- We create an ideal candidate profile, and once you confirm the job description and other relevant details, we start the searching process.

- During the next 2-3 weeks, we arrange pre-screening and conduct interviews with our recruiters to assess if each candidate is a good match for your business.

- After 4-5 weeks, you can take a look at the shortlist of top data labelers. We’ll send you 3-5 CVs with short descriptions and brief recommendations on each potential team member. Please note that for larger groups, we sometimes require more time.

- You choose the best data labeling specialists, and we send them job offers once you give us the final confirmation.

- We issue an invoice that doesn’t include recruiting fees – you only pay for your new team’s annotation services.

That’s how Mobilunity-BPO has become the time-tested and globally recognized outsourcing provider for 40+ clients. Start hiring your data labeler from a reliable outsourcing provider right now!